Resources

For more information about our projects, team, and publications please navigate to our Improving Rescue homepage:

The cognitive biases and strategies identified in Turning Points are adapted from the Ottawa M&M Model and the work of Pat Croskerry and can be referenced in the literature below:

- Diagnostic Failure: A Cognitive and Affective Approach

- The Importance of Cognitive Errors in Diagnosis and Strategies to Minimize Them

- Profiles in Patient Safety: A "Perfect Storm" in the Emergency Department

- Cognitive Debiasing 1: Origins of Bias and Theory of Debiasing

Cognitive Biases That May Lead to Diagnostic Error

Error of over-attachment to a particular diagnosis

Anchoring

The tendency to perceptually lock on to salient features in the patient's initial presentation too early in the diagnostic process and to fail to adjust this initial impression in the light of later information. This Cognitive Disposition to Respond (CDR) might be severely compounded by the confirmation bias.

Confirmation bias

The tendency to look for confirming evidence to support a diagnosis rather than look for disconfirming evidence to refute it, despite the latter being more persuasive and definitive.

Premature closure

A powerful CDR accounting for a high proportion of missed diagnoses. It is the tendency to apply premature closure to the decision-making process, accepting a diagnosis before it has been fully verified. The consequences of the bias are reflected in the maxim: "When the diagnosis is made, the thinking stops."

Error due to failure to consider alternative diagnoses

Multiple alternatives bias

A multiplicity of options on a differential diagnosis might lead to significant conflict and uncertainty. The process might be simplified by reverting to a smaller subset with which the physician is familiar, but might result in inadequate consideration of other possibilities. One such strategy is the three-diagnosis differential: “it is probably A, but it might be B, or I don’t know (C).” Although this approach has some heuristic value, if the disease falls in the C category and is not pursued adequately, it minimizes the chance that serious diagnoses are made.

Representativeness restraints

Drive the diagnostician toward looking for prototypical manifestations of disease: "if it looks like a duck, walks like a duck, quacks like a duck, then it is a duck." Yet restraining decision making along these pattern recognition lines leads to atypical variants being missed.

Search satisficing

Reflects the universal tendency to call off a search once something is found. Co-morbidities, second foreign bodies, other fractures, and co-ingestants in poisoning may all be missed.

Error due to inheriting someone else’s thinking

Diagnosis momentum

Once diagnostic labels are attached to patients they tend to become stickier and stickier. Through intermediaries (patients, paramedics, nurses, physicians) what might have started as a possibility gathers increasing momentum until it becomes definite, and all other possibilities are excluded.

Framing effect

How diagnosticians see things might be strongly influenced by the way in which the problem is framed, e.g., physicians' perceptions of risk to the patient may be strongly influenced by whether the outcome is expressed in terms of the possibility that the patient might die or might live. In terms of diagnosis, physicians should be aware of how patients, nurses, and other physicians frame potential outcomes and contingencies of the clinical problem to them.

Bandwagon effect

The tendency for people to believe and do certain things because many others are doing so. Group-think is an example, and it can have a disastrous impact on team decision making and patient care.

Errors in prevalence perception or estimation

Availability bias

The disposition to judge things as being more likely, or frequently occurring, if they readily come to mind. Thus, recent experience with a disease might inflate the likelihood of its being diagnosed. Conversely, if a disease has not been seen for a long time (is less available), it might be underdiagnosed.

Base-rate neglect

The tendency to ignore the true prevalence of a disease, either inflating or reducing its base-rate, and distorting Bayesian reasoning. However, in some cases clinicians might (consciously or otherwise) deliberately inflate the likelihood of disease, such as in the strategy of "rule out worst-case scenario" to avoid missing a rare but significant diagnosis.
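The effect of the base-rate on Bayesian reasoning can be illustrated with a small calculation. The sketch below is not part of the source material: the function name `post_test_probability` and all numbers (prevalence, sensitivity, specificity) are hypothetical values chosen only to show how the same positive test result means something very different at a low versus a high base-rate.

```python
def post_test_probability(prevalence, sensitivity, specificity):
    """Probability of disease given a positive test, via Bayes' theorem.

    All three inputs are proportions between 0 and 1.
    """
    true_positives = sensitivity * prevalence
    false_positives = (1.0 - specificity) * (1.0 - prevalence)
    return true_positives / (true_positives + false_positives)


# Hypothetical test: 90% sensitive and 90% specific (illustrative values only).
rare = post_test_probability(prevalence=0.01, sensitivity=0.9, specificity=0.9)
common = post_test_probability(prevalence=0.20, sensitivity=0.9, specificity=0.9)

print(f"Base-rate 1%:  post-test probability = {rare:.3f}")
print(f"Base-rate 20%: post-test probability = {common:.3f}")
```

With these assumed figures, a positive result leaves the disease unlikely when the base-rate is 1% but makes it more likely than not when the base-rate is 20%. Inflating or deflating the base-rate therefore shifts the conclusion even though the test itself has not changed.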

Hindsight bias

Knowing the outcome might profoundly influence perception of past events and prevent realistic appraisal of what actually occurred. In the context of diagnostic error, it may compromise learning through either an underestimation (illusion of failure) or overestimation (illusion of control) of the decision maker's abilities.

Errors involving patient characteristics or presentation context

Fundamental attribution error

The tendency to be judgmental and blame patients for their illnesses (dispositional causes) rather than examine the circumstances (situational factors) that might have been responsible. In particular, psychiatric patients, minorities, and other marginalized groups tend to suffer from this CDR. Cultural differences exist in terms of the respective weight attributed to dispositional and situational causes.

Triage cueing

The triage process occurs throughout the health care system, from the self-triage of patients to the selection of a specialist by the referring physician. In the emergency department, triage is a formal process that results in patients being sent in particular directions, which cues their subsequent management. Many CDRs are initiated at triage, leading to the maxim: "Geography is destiny." Once a patient is referred to a specific discipline, the bias within that discipline to look at the patient only from their own perspective is referred to as deformation professionnelle.

Yin-yang out

When patients have been subjected to exhaustive and unavailing diagnostic investigations, they are said to have been worked up the yin-yang. The yin-yang out is the tendency to believe that nothing further can be done to throw light on the dark place where, and if, any definitive diagnosis resides for the patient, i.e., the physician is let out of further diagnostic effort. This might prove ultimately to be true, but to adopt the strategy at the outset is fraught with the chance of a variety of errors.

Errors associated with physician affect, personality, or decision style

Commission bias

Results from the obligation toward beneficence, in that harm to the patient can only be prevented by active intervention. It is the tendency toward action rather than inaction. It is more likely in overconfident physicians. Commission bias is less common than omission bias.

Omission bias

The tendency toward inaction, rooted in the principle of non-maleficence. In hindsight, events that have occurred through the natural progression of a disease are more acceptable than those that may be attributed directly to the action of the physician. The bias might be sustained by the reinforcement often associated with not doing anything, but it may prove disastrous. Omission biases typically outnumber commission biases.

Outcome bias

The tendency to opt for diagnostic decisions that will lead to good outcomes, rather than those associated with bad outcomes, thereby avoiding chagrin associated with the latter. It is a form of value bias in that physicians might express a stronger likelihood in their decision-making for what they hope will happen rather than for what they really believe might happen. This may result in serious diagnoses being minimized.

Overconfidence / underconfidence

A universal tendency to believe we know more than we do. Overconfidence reflects a tendency to act on incomplete information, intuitions, or hunches. Too much faith is placed in opinion instead of carefully gathered evidence.

Zebra retreat

Occurs when a rare diagnosis (zebra) figures prominently on the differential diagnosis but the physician retreats from it for various reasons: perceived inertia in the system and barriers to obtaining special or costly tests; self-consciousness and underconfidence about entertaining a remote and unusual diagnosis and gaining a reputation for being esoteric; the fear of being seen as unrealistic and wasteful of resources; under- or overestimating the base-rate for the diagnosis; the ED might be very busy and the anticipated time and effort to pursue the diagnosis might dilute the physician's conviction; team members may exert coercive pressure to avoid wasting the team's time; inconvenience of the time of day or weekend and difficulty getting access to specialists; unfamiliarity with the diagnosis might make the physician less likely to go down an unfamiliar road; fatigue or other distractions may tip the physician toward retreat.

Adapted from Campbell SG, Croskerry P, Bond WF. Profiles in patient safety: a "perfect storm" in the emergency department. Acad Emerg Med. 2007;14:743-749. Used in the Ottawa M&M Model Guide.

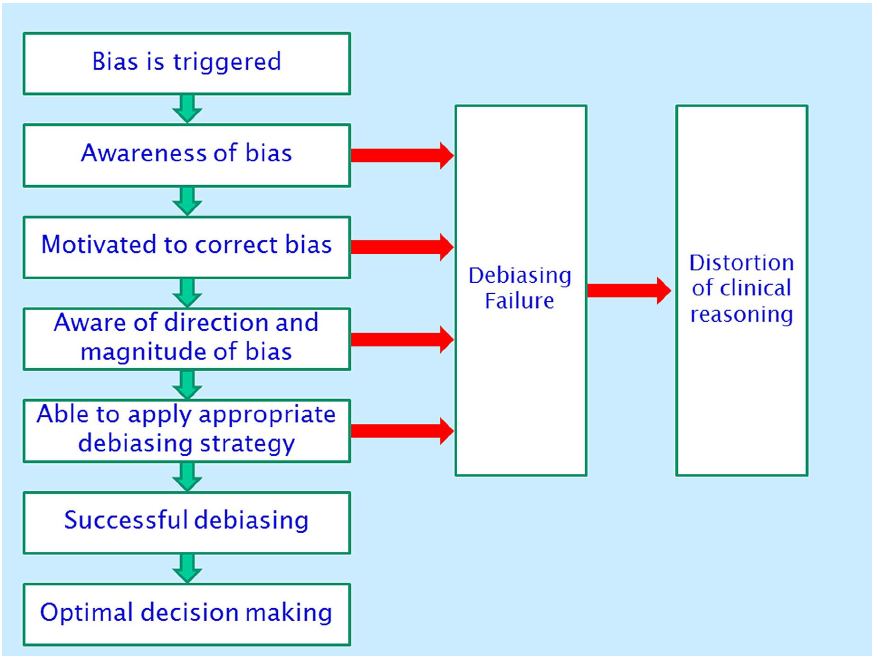

Successive Steps in Cognitive Debiasing

High-risk Situations for Biased Reasoning

| High-risk situation | Potential biases |
|---|---|
| 1. Was this patient handed off to me from a previous shift? | Diagnosis momentum, framing |
| 2. Was the diagnosis suggested to me by the patient, nurse or another physician? | Premature closure, framing bias |
| 3. Did I just accept the first diagnosis that came to mind? | Anchoring, availability, search satisficing, premature closure |
| 4. Did I consider other organ systems besides the obvious one? | Anchoring, search satisficing, premature closure |
| 5. Is this a patient I don’t like, or like too much, for some reason? | Affective bias |
| 6. Have I been interrupted or distracted while evaluating this patient? | All biases |
| 7. Am I feeling fatigued right now? | All biases |
| 8. Did I sleep poorly last night? | All biases |
| 9. Am I cognitively overloaded or overextended right now? | All biases |
| 10. Am I stereotyping this patient? | Representative bias, affective bias, anchoring, fundamental attribution error, psych out error |
| 11. Have I effectively ruled out must-not-miss diagnoses? | Overconfidence, anchoring, confirmation bias |

Adapted from Graber:³⁴ General checklist for AHRQ project. A description of specific biases can be found in Croskerry.⁷

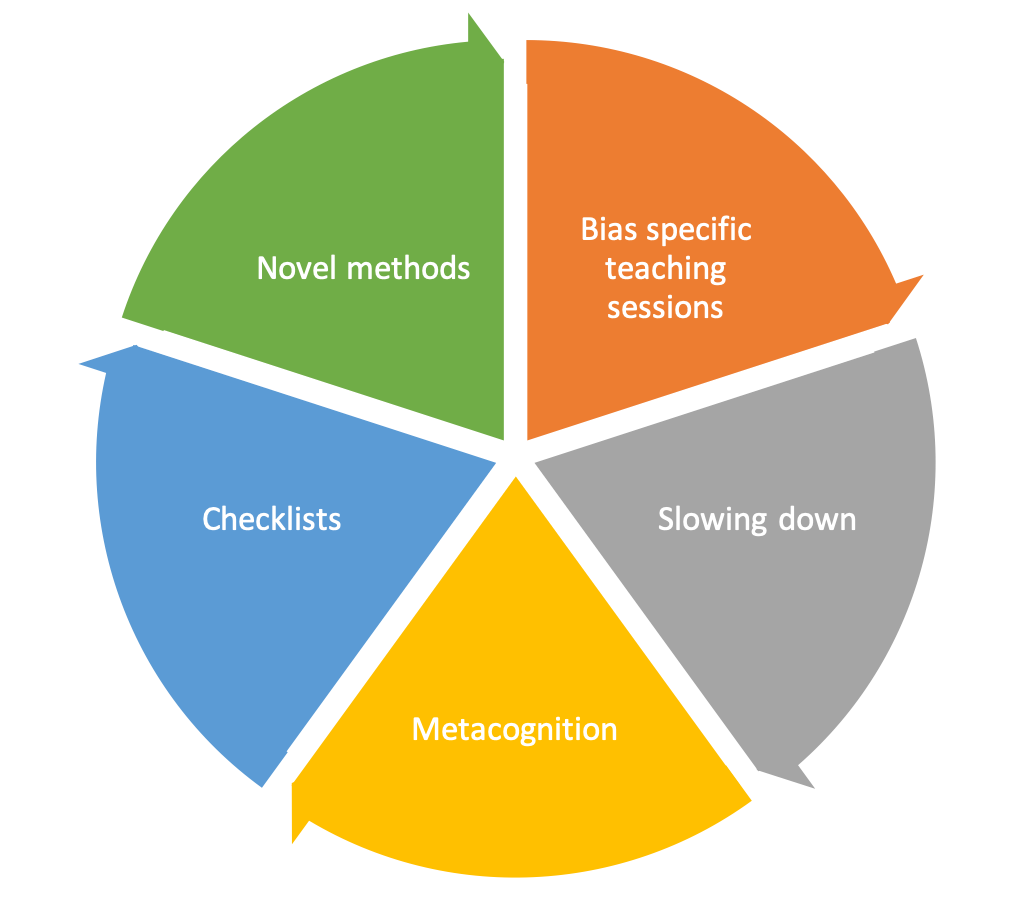

Cognitive Debiasing Strategies to Reduce Diagnostic Error

| Strategy | Mechanism / action |
|---|---|
| Develop insight / awareness | Provide detailed descriptions and thorough characterizations of known CDRs and ADRs together with multiple clinical examples illustrating their adverse effects on decision making and diagnosis formulation. |
| Consider alternatives | Establish forced consideration of alternative possibilities, e.g., the generation and working through of a differential diagnosis. Encourage routinely asking the question: What else might this be? |
| Heighten metacognition | Train for a reflective approach to problem-solving: stepping back from the immediate problem to examine and reflect on the thinking and affective process. |
| Develop cognitive forcing strategies | Develop generic and specific strategies to avoid predictable CDRs and ADRs in particular clinical situations. |
| Provide specific training | Identify specific flaws and biases in thinking and provide directed training to overcome them: e.g., instruction in fundamental rules of probability, distinguishing correlation from causation, basic Bayesian probability theory. |
| Provide simulation training | Develop mental rehearsal, "cognitive walkthrough" strategies for specific clinical scenarios to allow CDRs and ADRs to be made and their consequences to be observed. Construct new scenarios or clinical training videos contrasting incorrect (biased) approaches with the correct (debiased) approach. |

In addition to cognitive error in practice, it is important to identify additional factors and contributors that may affect clinical decision making.

Cognitive Issue

A specific pitfall in clinical decision making.

Examples

- Incomplete/mis-information

- Inaccurate/misinterpretation

- Perceptual errors

- Suboptimal weighing/prioritizing of diagnostic probabilities

- Cognitive biases, heuristics, logical fallacies

Hospital/System Issue

Hospital-wide contributors

Hospital-wide system or process failures affecting access to care

Examples

Access to patient services, consultants, inpatient beds, specialty treatments

Hospital administration contributors

Institutional functions, policies, guidelines, and regulatory issues affecting access to care

Examples

Budgetary constraints, hospital policies & guidelines

ED-based system issues

A problem beyond the individual clinician or team which pertains to how an emergency department operates

Examples

- Prehospital care (coordination, protocol adherence, lack of needed protocols)

- Triage (accuracy of triage, impact of under-triage, protocol violations, insufficient triage rules)

- Wait times (impact of delays in offloading, triage, registration, awaiting investigations or consults)

- Investigations (availability of diagnostic imaging, timeliness and accuracy of results, follow-up of modified final reports, delay in laboratory testing results, availability of appropriate laboratory testing, follow-up of positive cultures)

- Therapy (availability of medications, medication errors, procedural errors or complications)

- Consultations (communication, delay in response, appropriateness of service, admission algorithm issues, impact of decisions on return ED visits)

- Discharge planning (lack of appropriate follow-up, delay in follow-up, communication issues around follow up or reasons to return)

- Shiftwork (impact of fatigue, timing of decisions relative to when you were on shift, i.e., beginning vs end of shift decisions)

- Crowding (impact of lack of beds, long wait times on decision making)

- Public/occupational health (people other than the patient, including health care workers are placed at risk)

Patient Factors

Any communication barrier (due to language, intoxication, obtunded, critically ill...), behavior eliciting affective bias

Example

- Genotype, unable to comply, inadequate social support

- Financial/social barriers, mental health

- Geographic location, pre-existing medical conditions (history of cardiac conditions), compliance with past treatments

Skill-set Error

Procedural complications or errors in interpretation of ECGs, laboratory or diagnostic imaging tests

Example

Sample mix-up/mislabeled, technical errors/poor processing of specimen/test, erroneous lab/radiol reading of test, erroneous clinician interpretation of test, failure/delay in considering the correct diagnosis

Task-based Error

Failure of routine behaviors such as regular bedside care, attention to vital signs and appropriate monitoring – often reflects work overload

Example

Failure/delay in ordering or performing needed tests, suboptimal test sequencing, ordering of wrong test(s), failed/delayed follow up action on test result, failure to appreciate urgency/acuity of illness, failure/delay in recognizing complication(s), failure/delay in ordering needed referral, suboptimal consultation diagnostic performance, failure to refer to setting for close monitoring, failure/delay in timely follow-up/rechecking of patient

Personal Impairment

Personal factors that impact job performance

Example

- Fatigue

- Illness

  - Physical

  - Emotional, e.g., moral distress

  - Mental

- Substance abuse

- Social stressors

- Family issues

Teamwork Failure

Breakdown in communication between team members, across shifts, between teams, and across specialty boundaries or due to inappropriate assignment of unqualified personnel to a given task – this includes resident and student supervision

Example

Failure/delay in eliciting critical piece of history data or critical physical exam finding, failure/delay to follow-up, failed/delayed transmission of result to clinician, inappropriate/unneeded referral, failed/delayed communication/follow-up of consultation

Local ED Environmental Contributors

Local/unit based organizational structures, coordination, and standardization affecting access to care

Example

Appropriate staffing, stocking, functional equipment, sufficient policies & guidelines